There is an important concept that runs through our product that I call explainability. I use the term a lot with our product and engineering teams to emphasize our data-first approach and our willingness to stand behind our data. The term is, well, self-explanatory, but I want to dig into it and show how it helps security professionals.

I don’t know any way to build a product that customers will truly trust other than to explain how its conclusions and rankings were determined. We are as much a deep data company as a security company, meaning that the CyCognito platform presents high-quality, actionable data to its users in the domain of cybersecurity. Let’s dig in.

What Is Explainability?

In a way, explainability is the story behind the data. And stories are often the best way to explain things to people. The stories are composed of information and connections, shown visually.

So first, what kinds of data do you need to tell the story? I think it’s data about data. This kind of data includes things like:

- When was the data collected?

- What are the sources?

- How certain is the system about the accuracy of the data?

- How was it detected?

- How can it be reproduced?

When you think about it, this is not too different from the well-known who, what, when, where, and why of a story.
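To make the idea of data about data concrete, here is a minimal sketch of how those five questions could travel with a single finding. The `Provenance` class, its field names, and the sample values are all hypothetical and chosen only for illustration; they are not the platform’s actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Provenance:
    """Hypothetical 'data about data' attached to a single finding."""
    collected_at: datetime    # when was the data collected?
    sources: list[str]        # what are the sources?
    confidence: float         # how certain is the system (0.0 to 1.0)?
    detection_method: str     # how was it detected?
    reproduction_steps: list[str] = field(default_factory=list)  # how can it be reproduced?

    def summary(self) -> str:
        """Render the record as a one-line, human-readable story."""
        return (
            f"Detected via {self.detection_method} "
            f"on {self.collected_at:%Y-%m-%d} "
            f"from {', '.join(self.sources)} "
            f"(confidence {self.confidence:.0%})"
        )


# Illustrative values only; none of these come from a real scan.
record = Provenance(
    collected_at=datetime(2024, 1, 15, tzinfo=timezone.utc),
    sources=["port scan", "certificate database"],
    confidence=0.9,
    detection_method="TLS handshake",
    reproduction_steps=["openssl s_client -connect example.com:443"],
)
print(record.summary())
```

Keeping these fields next to the finding, rather than in a separate log, means the explanation is available wherever the finding is displayed.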

This data is pulled from a variety of different sources, including scanning modules, web crawling, third-party databases, and threat intelligence feeds. It then undergoes sophisticated validation, analysis, processing, aggregation, and automatic decision-making on a massive scale.

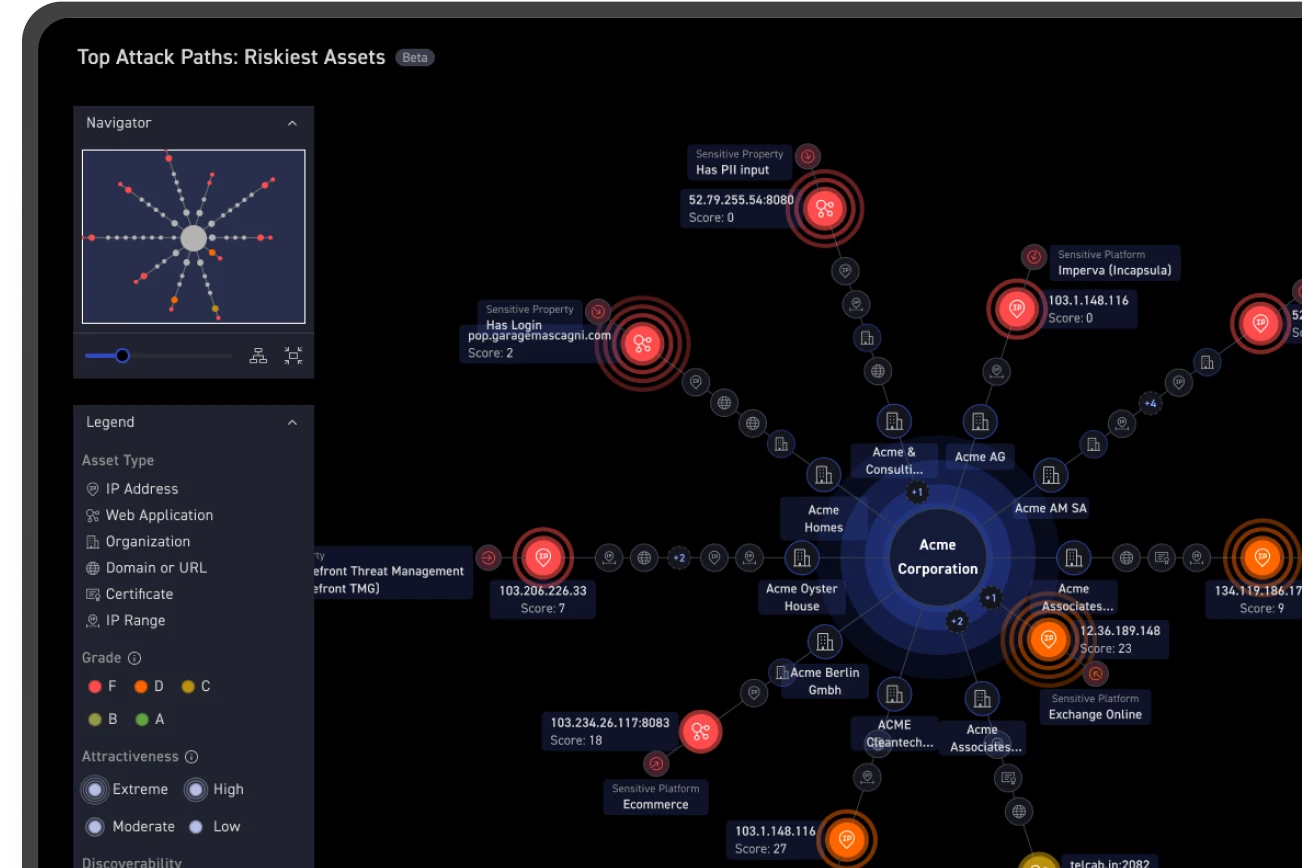

Second, for the sake of clarity, we want to visualize all our explanations in a useful and convenient way, especially in complex situations such as discovery. Sometimes a handful of fields or a simple table is enough, but often much more complex visualizations are needed. For example, the discovery path uses complex graph algorithms to explain how a certain asset is related to the organization and how an attacker could discover it. That calls for a more sophisticated visualization that illustrates the underlying complexity.

I believe an automated product that provides critical, multi-layered, and actionable information about an organization’s exposure, business risk, and critical attack vectors is much more useful when it also explains that information. Being accurate is critical, but explaining how conclusions were reached is equally so.

Explainability in Action

Let’s take a look at two common tasks security teams face. This will bring explainability into sharper focus.

The first common use case for explainability is describing the discovery path. At the heart of our technology lies an ability to discover IT assets based on company name alone. This is a very complex and algorithm-heavy process that requires AI-based business mapping and subsidiary detection as well as sophisticated internet-wide crawling. It is the same process a sophisticated attacker might perform in a targeted attack.

The output of this process is a customer’s exposed asset inventory. Because the process is complex and has many steps, it’s often unclear why and how a certain asset is related to the organization. Take this example: a company has a subsidiary that has another subsidiary that owns a domain that points to an IP that has a certificate that is shared with another IP. Whew!

The discovery path tells the story of how the asset was discovered, so the customer can understand why it’s related. It also helps the customer understand how a potential attacker could discover this asset simply by starting from the customer’s main website.
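As a rough illustration of how such a chain can be turned into a readable path, here is a minimal sketch that runs a plain breadth-first search over a small relationship graph. Breadth-first search is a stand-in I chose for brevity, not the algorithm our platform uses, and the `EDGES` data, entity names, and `discovery_path` function are hypothetical.

```python
from collections import deque

# Hypothetical relationship graph: node -> list of (relation, neighbor).
# Names and addresses are illustrative, loosely following the example above.
EDGES = {
    "Acme Corporation": [("owns subsidiary", "Acme Holdings")],
    "Acme Holdings": [("owns subsidiary", "Acme Labs")],
    "Acme Labs": [("owns domain", "labs.example.com")],
    "labs.example.com": [("resolves to", "192.0.2.10")],
    "192.0.2.10": [("shares certificate with", "192.0.2.20")],
}


def discovery_path(start, target, edges):
    """Return the shortest chain of (source, relation, target) steps, or None."""
    queue = deque([start])
    came_from = {start: None}  # node -> (previous node, relation)
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for relation, neighbor in edges.get(node, []):
            if neighbor not in came_from:
                came_from[neighbor] = (node, relation)
                queue.append(neighbor)
    if target not in came_from:
        return None
    # Walk backwards from the target to rebuild the path in order.
    steps = []
    node = target
    while came_from[node] is not None:
        prev, relation = came_from[node]
        steps.append((prev, relation, node))
        node = prev
    return list(reversed(steps))


for source, relation, dest in discovery_path("Acme Corporation", "192.0.2.20", EDGES):
    print(f"{source} --{relation}--> {dest}")
```

Each printed step is something a person can check independently, which is what turns a surprising asset into an understandable one.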

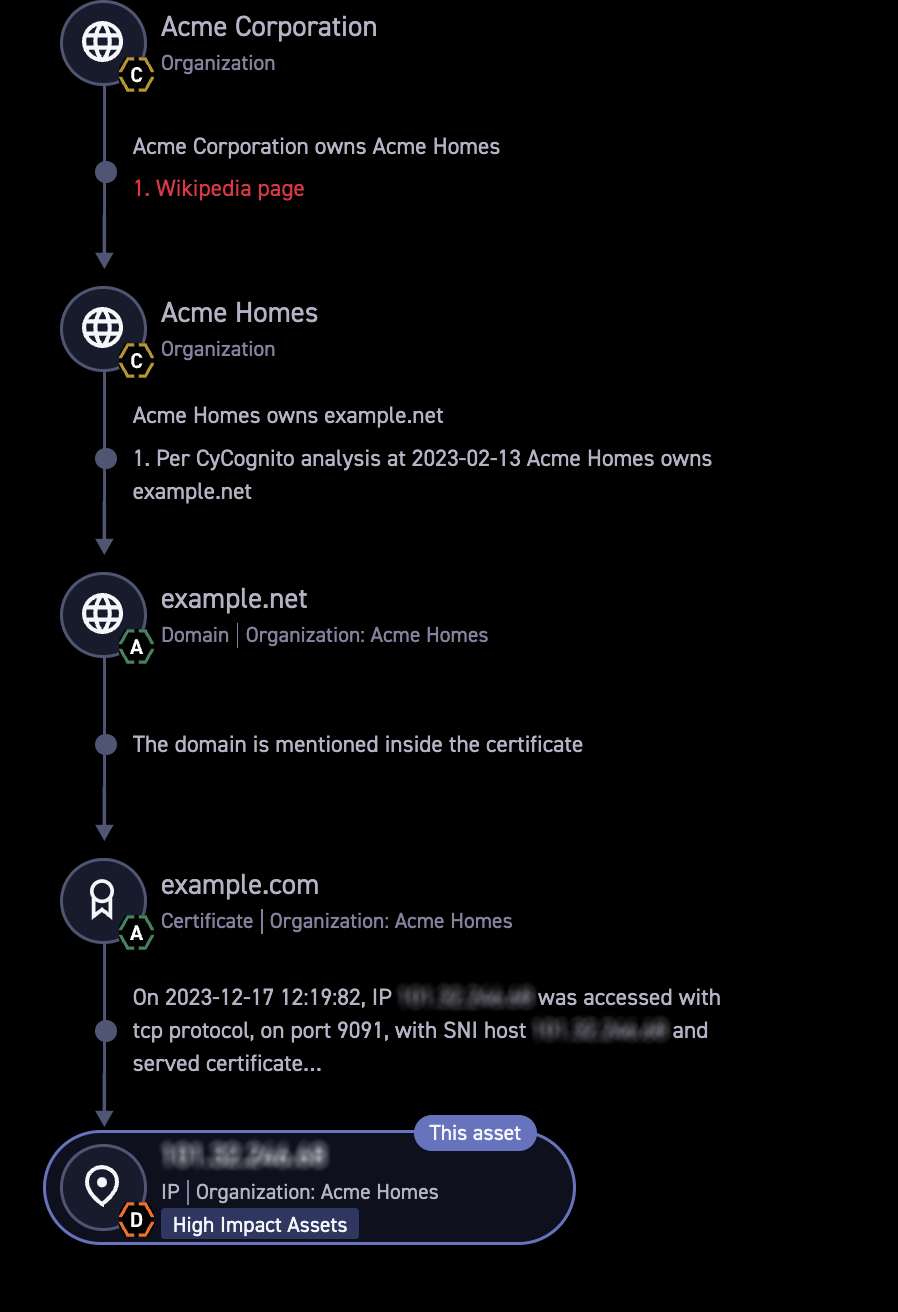

Here’s an example of us explaining our data and the decisions we made to reach the relevant conclusions. It takes a very complicated end result – attributing an asset to the attack surface – and breaks the process down into understandable and verifiable steps. It answers the frequent question: Why is this asset in my attack surface? Figure 1 below shows an example.

Figure 1. The discovery path shows Acme Corporation subsidiary ownership, domain ownership, and IP address attribution.

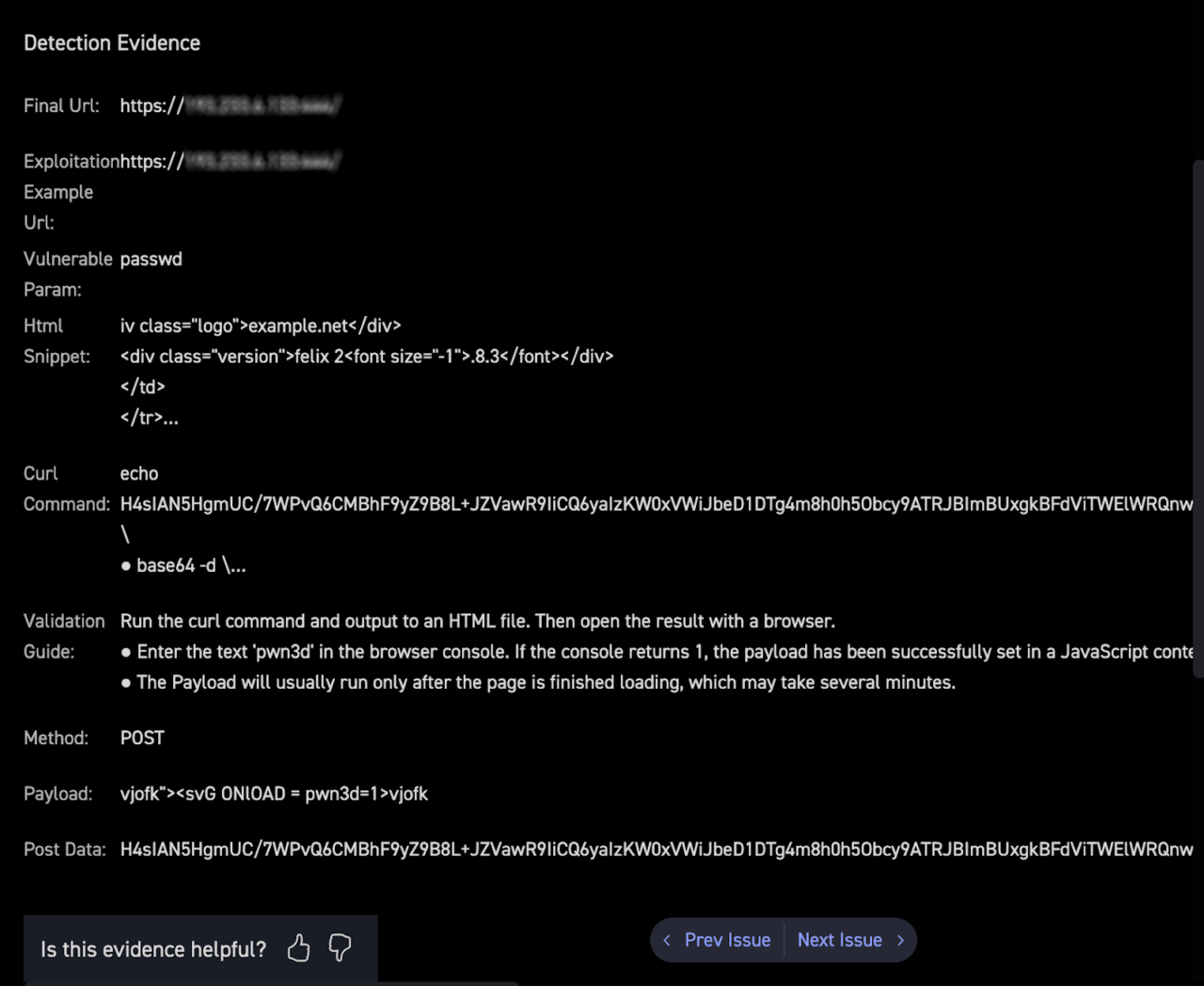

Another use case for explainability is providing evidence of issues. IT, Ops, and Dev teams are jaded when it comes to vulnerability reports because of the large volume of false positives they receive every week from a number of security products. Providing evidence of an issue helps them understand it better and trust that it is a real issue.

The CyCognito platform not only reports that the issue was found but also provides detection “evidence,” as shown in Figure 2 below. This type of evidence is especially important for fuzzy application vulnerabilities, such as XSS, SQL injection, and the like, where it’s important to understand details such as the specific query parameters affected. The detailed evidence makes validation much easier for the person responsible for remediation.

Figure 2. Technical details of a very specific XSS vulnerability instance provide evidence to the security analyst.
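To show why the affected query parameter matters, here is a minimal sketch of an evidence record that carries enough detail for someone to re-run the check. The `XssEvidence` class, its fields, and the sample URL and payload are hypothetical and do not represent the format the platform produces.

```python
from dataclasses import dataclass
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


@dataclass
class XssEvidence:
    """Hypothetical evidence record for one reflected XSS finding."""
    url: str               # page where the issue was observed
    parameter: str         # the specific query parameter affected
    payload: str           # the test string that was injected
    response_snippet: str  # the part of the response that echoed it back

    def reproduction_url(self) -> str:
        """Build a URL that re-sends the payload in the affected parameter."""
        parts = urlsplit(self.url)
        query = dict(parse_qsl(parts.query))
        query[self.parameter] = self.payload
        # urlencode percent-encodes the payload so the URL stays valid.
        return urlunsplit(parts._replace(query=urlencode(query)))

    def is_reflected(self) -> bool:
        """True when the payload appears unescaped in the captured response."""
        return self.payload in self.response_snippet


# Illustrative values only.
evidence = XssEvidence(
    url="https://shop.example.com/search?lang=en",
    parameter="q",
    payload="<script>alert(1)</script>",
    response_snippet="<p>Results for <script>alert(1)</script></p>",
)
print(evidence.reproduction_url())
print(evidence.is_reflected())
```

With the parameter, payload, and response captured together, the person responsible for remediation can confirm the issue in one request instead of hunting for the vulnerable input.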

Business Value of Explainability

A byproduct of the security value of explainability is business value. This is manifested in two ways:

Efficiency – A detailed explanation of an issue makes it much more efficient to resolve. Knowing who owns it reduces the time a security analyst spends figuring that out. Complete evidence also reduces the time a security analyst spends gathering needed information.

Trust – As mentioned, IT, Ops, and Dev teams have suffered from false positives for years. Hundreds or even thousands of bogus issues have eroded the trust they have in their security colleagues. Providing detailed evidence in an easily explained fashion helps rebuild this trust and helps the various teams work better together.

Explainability is a must for security products. Given the scale of infrastructures and the number of incidents that security, IT, and development teams must handle, trust and efficiency are paramount. Presenting data about data, telling a story, and visualizing it clearly all help achieve both.